This project is currently on hold due to personal circumstances.

Development is expected to resume after December 6, 2025.

Join Us

Scan the QR code to connect to IJAI.

| Directory | Purpose | Notes |
|---|---|---|
| App/ | Main Electron application: UI, launch, and packaging. | Build/dev scripts: npm run build, npm run dev. |
| IJAI-configs/ | Assistant and model configuration files (profiles, launch parameters). | Keep example configs under examples/. |
| dataset/ | Datasets for LLM training/fine-tuning, corpora for STT, collections for TTS. | Specify format and license next to each dataset. |
| models/ | Bundled models and installers (GGUF, ONNX, etc.). | Add a README inside each model folder with download instructions. |
| plugins/ | Plugin system: OpenAPI integrations, extensions, and sample plugins. | Examples live in plugins/examples/. |
| share/lexicons/ | Lexicon resources: dictionaries, transcription tables, localization files. | Version lexicons and cite the source. |

| Path | Description |

|---|---|

| App/src/assets/icons/IJAI-logo.png | Application logo |

| App/src/assets/icons/.gitkeep | Placeholder (to be released) |

| App/src/components/ChartCard.js | Chart visualization component |

| App/src/components/DataTable.js | Tabular data component |

| App/src/components/Header.js | Application header |

| App/src/components/Sidebar.js | Sidebar navigation |

| App/src/styles/dashboard.css | Dashboard styling |

| App/src/styles/material.css | Material Design overrides |

| App/index.html | Main HTML entry point |

| App/renderer.js | Electron renderer process |

| App/main.js | Electron main process |

| App/preload.js | Secure preload bridge |

| App/README.md | Documentation for app module |

| App/electron-builder.yml | Build configuration (electron-builder) |

| App/forge.config.js | Forge build configuration |

| App/package.json | NPM dependencies and scripts |

| IJAI-configs/assistant.conf.yaml | Assistant runtime configuration |

| IJAI-configs/models.conf.json | Model registry definitions |

| IJAI-configs/policies.yaml | Policy rules |

| dataset/llm/set1/doc.md | LLM training notes |

| dataset/llm/set2/.gitkeep | Placeholder (to be released) |

| dataset/llm/set8/doc.md | Supplemental dataset |

| dataset/llm/articles_names.txt | Corpus index of articles |

| dataset/llm/doc.md | General dataset description |

| dataset/stt/book1.txt | STT training text |

| dataset/tts/voice1_set/.gitkeep | Placeholder (to be released) |

| dataset/tts/voice2_set/.gitkeep | Placeholder (to be released) |

| dataset/tts/book1.txt | TTS training corpus |

| dataset/important.jl | Julia dataset file |

| dataset/magnificent.asm | Assembly dataset (experimental) |

| dataset/examples/Текстовый документ.txt | Placeholder (to be released) |

| dataset/files/info/Текстовый документ.txt | Metadata resources |

| models/codellm/README.md | Model documentation |

| models/codellm/codellama.py | Python integration |

| models/codellm/installer.sh | Installer script |

| models/ollama-deepseekr1:8b/README.md | Model documentation |

| models/ollama-deepseekr1:8b/deepseekr1.py | Python integration |

| models/ollama-deepseekr1:8b/installer.sh | Installer script |

| models/llm/gpt-neo/add_info.md | Additional notes |

| models/llm/gpt-neo/gptneo.py | GPT-Neo integration |

| models/llm/gpt-neo/merges.txt, vocab.json | Tokenizer files |

| models/llm/gpt-neo/special_tokens_map.json | Special tokens |

| models/llm/gpt-neo/tokenizer.json, tokenizer_config.json | Tokenizer configs |

| models/llm/gpt2-medium/config.json | Model configuration |

| models/llm/gpt2-medium/gpt2m.py | GPT-2 medium integration |

| models/llm/gpt2-medium/tokinizer.json | Tokenizer config (naming typo) |

| models/ollama-llama3/DISCLAIMER.md | Usage disclaimer |

| models/ollama-llama3/installer.sh | Installer script |

| models/ollama-llama3/llm3.py | Integration script |

| models/phi3mini/README.md | Model documentation |

| models/phi3mini/added_tokens.json | Model tokenizer additions |

| models/phi3mini/config.json | Model configuration |

| models/phi3mini/configuration_phi3.py | Model configuration script |

| models/phi3mini/generation_config.json | Generation parameters |

| models/phi3mini/model.safetensors | Model weights |

| models/phi3mini/modeling_phi3.py | Model architecture |

| models/phi3mini/script.py | Helper scripts |

| models/phi3mini/special_tokens_map.json | Special tokens map |

| models/phi3mini/tokenizer.json, tokenizer.model, tokenizer_config.json | Tokenizer files |

| models/stt/Coqui/`__init__.py` | Python package initialization |

| models/stt/Coqui/coqui_stt.py | STT integration script |

| models/stt/Silero/README.md | Model documentation |

| models/stt/Silero/`__init__.py` | Python package initialization |

| models/stt/Silero/silero_stt.py | STT integration script |

| models/stt/whisper-small/config.json, preprocessor_config.json, tokenizer.json | Whisper model configs |

| models/stt/whisper-small/whisper-small.py | Whisper integration script |

| models/tts/tts-small/Текстовый документ.txt | Placeholder (to be released) |

| models/vocoder/Текстовый документ.txt | Placeholder (to be released) |

| plugins/weather/manifest.json | Plugin manifest |

| plugins/weather/openapi.yaml | OpenAPI schema |

| share/lexicons/Текстовый документ.txt | Placeholder (to be released) |

| .gitignore | Git ignore rules |

| COMMERCIAL-LICENSE.md | Commercial license terms |

| CONTRIBUTORS.md | Contributor acknowledgments |

| LICENSE | Open source license |

| README.md | Main documentation |

| Model | Params | GPU (RTX 2080s) | CPU (i7-10700) |

|---|---|---|---|

| Phi-3 Mini | 3.8B | ~45 tok/s | ~6 tok/s |

| LLaMA 3 | 8B | ~22 tok/s | ~3 tok/s |

| DeepSeek R1 | 7B | ~25 tok/s | ~3.5 tok/s |

| CodeLLaMA | 7B | ~24 tok/s | ~3.2 tok/s |

| GPT-Neo | 2.7B | ~40 tok/s | ~5.5 tok/s |

| GPT-2 XL | 1.5B | ~60 tok/s | ~8 tok/s |

| Falcon-7B | 7B | ~26 tok/s | ~3.5 tok/s |

| Mistral-7B | 7B | ~28 tok/s | ~3.7 tok/s |

| Yi-6B | 6B | ~30 tok/s | ~4 tok/s |

| OPT-6.7B | 6.7B | ~23 tok/s | ~3 tok/s |

| StableLM-7B | 7B | ~22 tok/s | ~3 tok/s |
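The figures above are throughput values in generated tokens per second. The table does not say how they were measured, so the following is only a minimal sketch of one way to take such a measurement. The `generate` callable is a hypothetical stand-in for a model call and is not part of this repository.

```python
import time
from typing import Callable, Sequence


def tokens_per_second(generate: Callable[[str], Sequence], prompt: str, runs: int = 3) -> float:
    """Average generation throughput over several runs.

    `generate` is a hypothetical stand-in for a model call; it must return
    the sequence of tokens generated for the given prompt.
    """
    total_tokens = 0
    total_seconds = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        tokens = generate(prompt)
        total_seconds += time.perf_counter() - start
        total_tokens += len(tokens)
    if total_seconds <= 0:
        raise ValueError("elapsed time too small to measure")
    return total_tokens / total_seconds


def fake_generate(prompt: str) -> list:
    """Dummy generator used only to exercise the timer."""
    time.sleep(0.01)
    return prompt.split()
```

Averaging over several runs smooths out warm-up effects; a real benchmark would also fix the prompt length and the number of generated tokens so results are comparable between models.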

| Model / Folder | CPU | RAM | GPU | Notes |

|---|---|---|---|---|

| codellm | 4+ cores | 16 GB+ | Optional, recommended for 8B+ models | PyTorch / Ollama compatible |

| codellama | 4+ cores | 16 GB+ | NVIDIA GPU (RTX 2060+) for smooth inference | 8-bit/16-bit quantization recommended |

| ollama-deepseekr1:8b | 4+ cores | 16 GB+ | NVIDIA GPU for fast generation | Pretrained 8B model, uses Ollama runtime |

| llm/Falcon-7B | 4+ cores | 16 GB+ | GPU optional, faster with CUDA | TII Falcon, strong general LLM |

| llm/GPT-NeoX-20B | 8+ cores | 32 GB+ | High-end GPU (24 GB VRAM+) required | Very large model, slow on CPU |

| llm/Gemma | 4+ cores | 12 GB+ | GPU optional | Compact Google LLM |

| llm/LLaMA-2 | 4+ cores | 16 GB+ | GPU recommended | Meta LLaMA 2 family |

| llm/MPT | 4+ cores | 16 GB+ | GPU optional | MosaicML transformer family |

| llm/Mistral-7B | 4+ cores | 16 GB+ | GPU recommended | Highly efficient dense model |

| llm/OPT | 4+ cores | 16 GB+ | GPU optional | Meta OPT series |

| llm/Qwen-1.5 | 4+ cores | 16 GB+ | GPU optional | Multilingual Alibaba model |

| llm/StableLM | 4+ cores | 12 GB+ | GPU optional | StabilityAI open family |

| llm/Yi-1.5-6B | 4+ cores | 16 GB+ | GPU recommended | Bilingual efficiency |

| llm/gpt-j-6b | 4+ cores | 16 GB+ | GPU optional | EleutherAI GPT-J classic |

| llm/gpt-neo | 4+ cores | 12 GB+ | GPU optional | EleutherAI GPT-Neo |

| llm/gpt2-medium | 4+ cores | 8 GB+ | GPU optional | Classic GPT-2 medium |

| ollama-llama3/phi3mini | 4+ cores | 12 GB+ | GPU recommended | Small LLaMA3 variant |

| Model / Folder | CPU | RAM | GPU | Audio Requirements |

|---|---|---|---|---|

| stt/Coqui | 4+ cores | 8 GB+ | NVIDIA CUDA GPU recommended | WAV, 16 kHz, mono |

| stt/DeepSpeech | 4+ cores | 8 GB+ | Optional | WAV, 16 kHz, mono |

| stt/Faster-Whisper | 4+ cores | 8 GB+ | GPU recommended | WAV/OGG, 16 kHz |

| stt/Nemo ASR | 4+ cores | 8 GB+ | GPU strongly advised | WAV, 16 kHz |

| stt/Silero | 4+ cores | 8 GB+ | Optional | WAV, 16 kHz, mono |

| stt/Vosk | 4+ cores | 4 GB+ | Optional | WAV, 16 kHz, mono |

| stt/whisper-small | 4+ cores | 8 GB+ | GPU recommended | WAV/OGG, 16 kHz preferred |
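Most STT engines above expect 16 kHz mono WAV input, and the requirements tables additionally specify 16-bit PCM. As a rough sketch, a file can be checked against that format with Python's standard `wave` module before it is passed to an engine. The function name and defaults are illustrative assumptions, not project code.

```python
import wave


def check_wav(path: str, rate: int = 16000, channels: int = 1, sample_width: int = 2) -> list:
    """Return a list of format problems; an empty list means the file matches.

    sample_width is in bytes, so 2 corresponds to 16-bit PCM.
    """
    problems = []
    with wave.open(path, "rb") as wav:
        if wav.getframerate() != rate:
            problems.append(f"sample rate is {wav.getframerate()} Hz, expected {rate}")
        if wav.getnchannels() != channels:
            problems.append(f"{wav.getnchannels()} channels, expected {channels}")
        if wav.getsampwidth() != sample_width:
            problems.append(
                f"{8 * wav.getsampwidth()}-bit samples, expected {8 * sample_width}-bit"
            )
    return problems
```

This only inspects the WAV header. OGG input, which some engines in the table accept, would need a different reader.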

| Model / Folder | CPU | RAM | GPU | Notes |

|---|---|---|---|---|

| tts/Parler-TTS | 4+ cores | 8 GB+ | GPU strongly recommended | HuggingFace Parler, realistic voices |

| tts/tts-small | 4+ cores | 8 GB+ | Optional, recommended for faster synthesis | Lightweight TTS |

| vocoder | 4+ cores | 8 GB+ | GPU recommended | Converts spectrograms to waveform |

Models included:

- LLM: llm/gpt2-medium
- STT: stt/Silero
- TTS: tts/tts-small
- Vocoder: vocoder

Requirements:

| Resource | Recommended |

|---|---|

| CPU | 4 cores modern x86_64 |

| RAM | 8 GB |

| GPU (optional) | NVIDIA CUDA GPU (RTX 2060) for faster STT/TTS inference |

| Storage | ~1 GB for all models |

| Audio format | WAV, 16-bit PCM, mono, 16 kHz |

Models included:

- LLM: codellm, codellama, ollama-deepseekr1:8b, llm/Falcon-7B, llm/GPT-NeoX-20B, llm/Gemma, llm/LLaMA-2, llm/MPT, llm/Mistral-7B, llm/OPT, llm/Qwen-1.5, llm/StableLM, llm/Yi-1.5-6B, llm/gpt-j-6b, llm/gpt-neo, llm/gpt2-medium, ollama-llama3/phi3mini
- STT: stt/Coqui, stt/DeepSpeech, stt/Faster-Whisper, stt/Nemo ASR, stt/Silero, stt/Vosk, stt/whisper-small
- TTS: tts/Parler-TTS, tts/tts-small
- Vocoder: vocoder

Requirements:

| Resource | Recommended |

|---|---|

| CPU | 8+ cores modern x86_64 |

| RAM | 16 GB+ (32 GB recommended for multiple LLMs) |

| GPU | NVIDIA CUDA GPU (RTX 3060+ recommended) for smooth inference across LLM, STT, and TTS |

| Storage | 20+ GB depending on models downloaded |

| Audio format | WAV/OGG, 16-bit PCM, mono, 16 kHz |

Models included:

- LLM: llm/gpt2-medium
- STT: stt/Silero
- TTS: tts/tts-small
- Vocoder: vocoder

Requirements:

| Resource | Recommended |

|---|---|

| CPU | 4 cores modern x86_64 |

| RAM | 8 GB |

| GPU (optional) | NVIDIA CUDA GPU (e.g., RTX 2060) for faster STT/TTS inference |

| Storage | ~1 GB for all models |

| Audio format | WAV, 16-bit PCM, mono, 16 kHz |

The minimal set runs on CPU, but a GPU improves transcription and TTS speed. It is suitable for lightweight testing and small projects.

Models included:

- LLM: codellm, codellama, ollama-deepseekr1:8b, llm/gpt-neo, llm/gpt2-medium, ollama-llama3/phi3mini
- STT: stt/Coqui, stt/Silero, stt/whisper-small
- TTS: tts/tts-small
- Vocoder: vocoder

Requirements:

| Resource | Recommended |

|---|---|

| CPU | 8+ cores modern x86_64 |

| RAM | 16 GB+ (32 GB recommended for multiple LLMs) |

| GPU | NVIDIA CUDA GPU (RTX 3060+ recommended) for smooth inference across LLM, STT, and TTS |

| Storage | 10+ GB depending on models downloaded |

| Audio format | WAV/OGG, 16-bit PCM, mono, 16 kHz |

The full set enables full functionality: large LLMs, multiple STT engines, and high-quality TTS. A GPU is strongly recommended for a smooth experience.
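The thresholds in the requirements tables can be turned into a simple hardware check. The sketch below is illustrative only: the function name and return values are hypothetical, and falling back to the minimal set when no CUDA GPU is present is a deliberately conservative assumption (the tables recommend a GPU for the full set but do not strictly require one).

```python
def pick_model_set(cpu_cores: int, ram_gb: float, has_cuda_gpu: bool) -> str:
    """Map hardware to 'full', 'minimal', or 'unsupported'.

    Thresholds follow the requirements tables:
      full    -> 8+ cores, 16 GB+ RAM (GPU treated as required here: an assumption)
      minimal -> 4 cores, 8 GB RAM, GPU optional
    """
    if cpu_cores >= 8 and ram_gb >= 16 and has_cuda_gpu:
        return "full"
    if cpu_cores >= 4 and ram_gb >= 8:
        return "minimal"
    return "unsupported"
```

For example, `pick_model_set(os.cpu_count() or 1, ram_gb=16, has_cuda_gpu=False)` would use the detected core count; RAM and GPU detection are left to the caller because the standard library has no portable way to query them.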

```mermaid
flowchart TB
    %% === SYSTEM CHECK ===
    SYS[System Profiler: CPU GPU RAM]

    %% === OPTIMIZATION PIPELINE ===
    OPT[Auto Configurator: Quantization + Model Selection]
    BENCH[Benchmark Runner: Tokens per sec]
    DEPLOY[Runtime Deployment: Optimized Models]

    %% === RAW MODELS ===
    subgraph RAW [Raw Models]
        M1[CodeLLM or CodeLLaMA]
        M2[DeepSeek R1]
        M3[GPT-Neo and GPT-2]
        M4[LLaMA-3]
        M5[Phi-3 Mini]
        M6[Whisper Small STT]
        M7[TTS Small]
        M8[Vocoder]
    end

    %% === DATASETS ===
    subgraph DATA [Datasets]
        D1[LLM corpora]
        D2[STT transcripts]
        D3[TTS voices]
    end

    %% === CONFIGS ===
    subgraph CFG [Configuration Layer]
        C1[assistant.conf.yaml]
        C2[models.conf.json]
        C3[policies.yaml]
    end

    %% === RUNTIME SERVICES ===
    subgraph RUNTIME [Runtime Services]
        MON[Monitoring and Logging]
        SEC[Policy Engine]
        API[Runtime API]
    end

    %% === UI & PLUGINS ===
    subgraph UI [User Interface]
        U1[Electron Desktop UI]
        P1[Plugins: Weather etc]
    end

    %% === FLOWS ===
    SYS --> OPT --> BENCH --> DEPLOY

    %% DATA → RAW MODELS
    D1 --> M1
    D1 --> M2
    D1 --> M3
    D1 --> M4
    D1 --> M5
    D2 --> M6
    D3 --> M7 --> M8

    %% RAW MODELS → OPTIMIZER
    M1 --> OPT
    M2 --> OPT
    M3 --> OPT
    M4 --> OPT
    M5 --> OPT
    M6 --> OPT
    M7 --> OPT
    M8 --> OPT

    %% OPTIMIZED DEPLOY → RUNTIME SERVICES
    DEPLOY --> API
    DEPLOY --> MON
    DEPLOY --> SEC

    %% CONFIGS
    C1 --> API
    C2 --> API
    C3 --> SEC

    %% UI LAYER
    U1 --> API
    U1 --> MON
    U1 --> SEC
    U1 --> P1
    P1 --> API
```

- assistant.conf.yaml – Core assistant runtime configuration.

- models.conf.json – Central model registry.

- policies.yaml – Execution and safety policies.
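As an illustration of how a central model registry might be consumed, here is a minimal loader sketch. The schema of models.conf.json is not documented in this README, so the layout used here (a top-level `models` list whose entries carry `name` and `path`) is purely an assumption, as is the function name.

```python
import json
from pathlib import Path


def load_model_registry(path: str) -> dict:
    """Load a model registry file and index its entries by name.

    Assumed (hypothetical) layout:
        {"models": [{"name": "...", "path": "..."}, ...]}
    """
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    registry = {}
    for entry in data.get("models", []):
        missing = {"name", "path"} - entry.keys()
        if missing:
            raise ValueError(f"registry entry missing keys: {sorted(missing)}")
        if entry["name"] in registry:
            raise ValueError(f"duplicate model name: {entry['name']}")
        registry[entry["name"]] = entry
    return registry
```

Rejecting duplicates and incomplete entries at load time keeps configuration mistakes from surfacing later as runtime failures.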

Supported families include:

- CodeLLM – Code generation.

- DeepSeek R1 – Reasoning model.

- GPT-Neo & GPT-2 – General language models.

- LLaMA-3 – Open-source LLM.

- Phi-3 Mini – Lightweight transformer model.

- Whisper Small – Speech recognition.

- TTS Small + Vocoder – Speech synthesis.

Each model folder provides:

- Installer (installer.sh)
- Python integration (*.py)
- Tokenizer/configuration files
- Model weights (.safetensors)

- LLM corpora – Markdown/text resources.

- STT corpora – Speech recognition text files.

- TTS corpora – Voice datasets, transcripts.

- Experimental – Julia (.jl) and Assembly (.asm) files.

- Weather plugin as reference implementation.
  - manifest.json – Plugin declaration.
  - openapi.yaml – API specification.
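A plugin folder following the Weather layout can be sanity-checked before loading. The sketch below only verifies that the two files named above exist and that manifest.json parses as JSON. It assumes nothing about the manifest's fields, which this README does not specify, and `check_plugin_dir` is a hypothetical helper name.

```python
import json
from pathlib import Path

# File names taken from the Weather reference plugin.
REQUIRED_FILES = ("manifest.json", "openapi.yaml")


def check_plugin_dir(plugin_dir: str) -> list:
    """Return a list of problems found in a plugin folder (empty if none)."""
    root = Path(plugin_dir)
    problems = [f"missing {name}" for name in REQUIRED_FILES if not (root / name).is_file()]
    manifest = root / "manifest.json"
    if manifest.is_file():
        try:
            json.loads(manifest.read_text(encoding="utf-8"))
        except json.JSONDecodeError as exc:
            problems.append(f"manifest.json is not valid JSON: {exc.msg}")
    return problems
```

Validating openapi.yaml itself would require a YAML parser, which is outside the standard library, so it is left out here.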

```bash
# Clone repository
git clone https://github.com/your-org/IJAI.git
cd IJAI

# Install frontend dependencies
cd App
npm install

# Run in development
npm start

# Build production desktop app
npm run build
```

Model installation is handled by individual installer.sh scripts inside each model folder.
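Because each model ships its own installer.sh, it can help to list which installers are present before running any of them. The following is a small sketch under that assumption. `find_installers` is a hypothetical helper, and it deliberately only discovers the scripts rather than executing them.

```python
from pathlib import Path


def find_installers(models_root: str) -> list:
    """Return the sorted paths of every installer.sh below models_root."""
    return sorted(Path(models_root).rglob("installer.sh"))


def print_install_commands(models_root: str = "models") -> None:
    """Print one shell command per discovered installer, without running it."""
    for script in find_installers(models_root):
        print(f"bash {script}")
```

Printing the commands instead of invoking them leaves the decision of which models to download, and when, with the user.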

- Start the Electron application.
- Configure assistant and models via IJAI-configs/.
- Place datasets in dataset/.
- Install and load required models from models/.
- Extend functionality with plugins/.

- Follow established coding standards.

- Submit pull requests for review.

- See CONTRIBUTORS.md for acknowledgments.

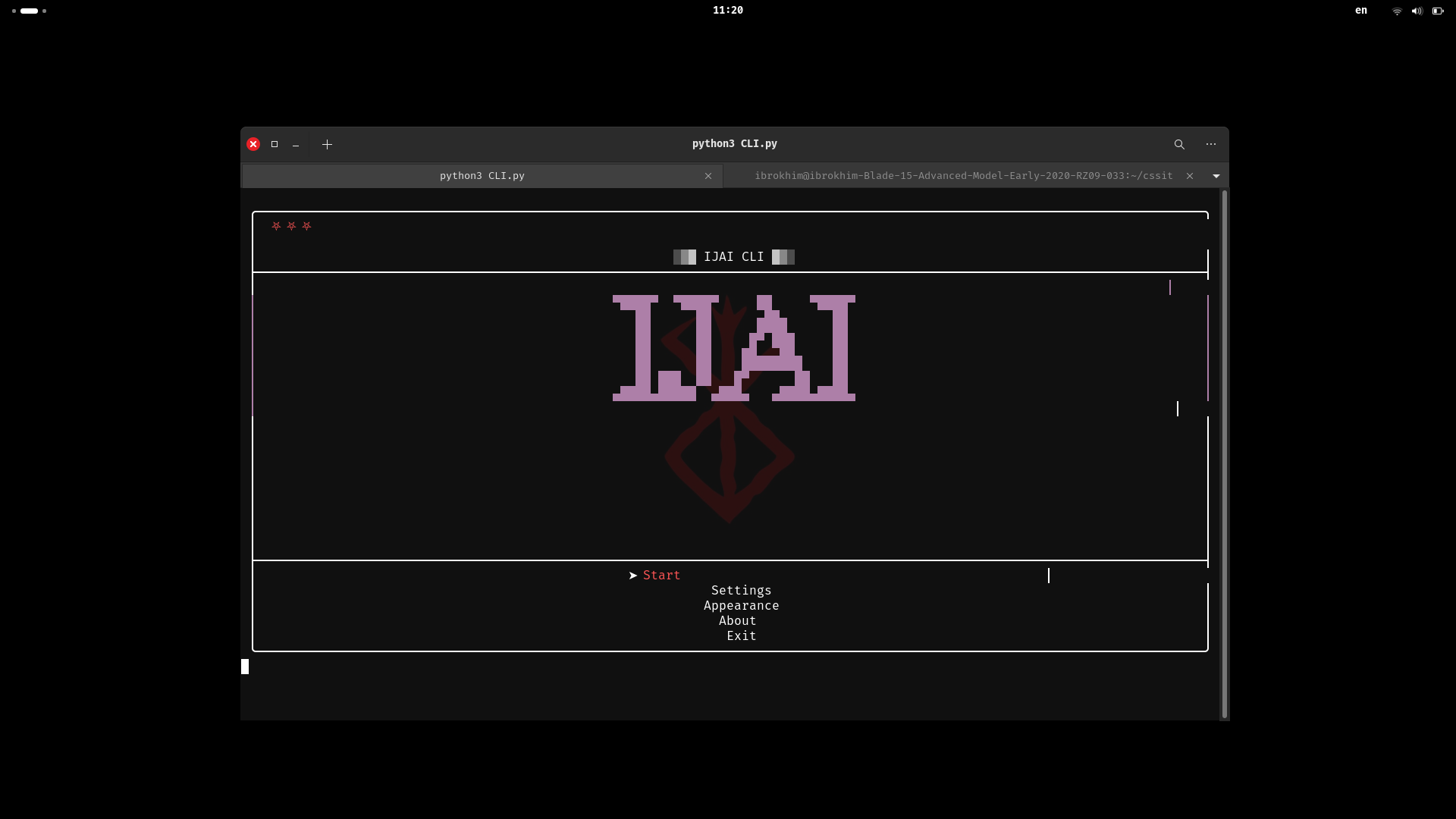

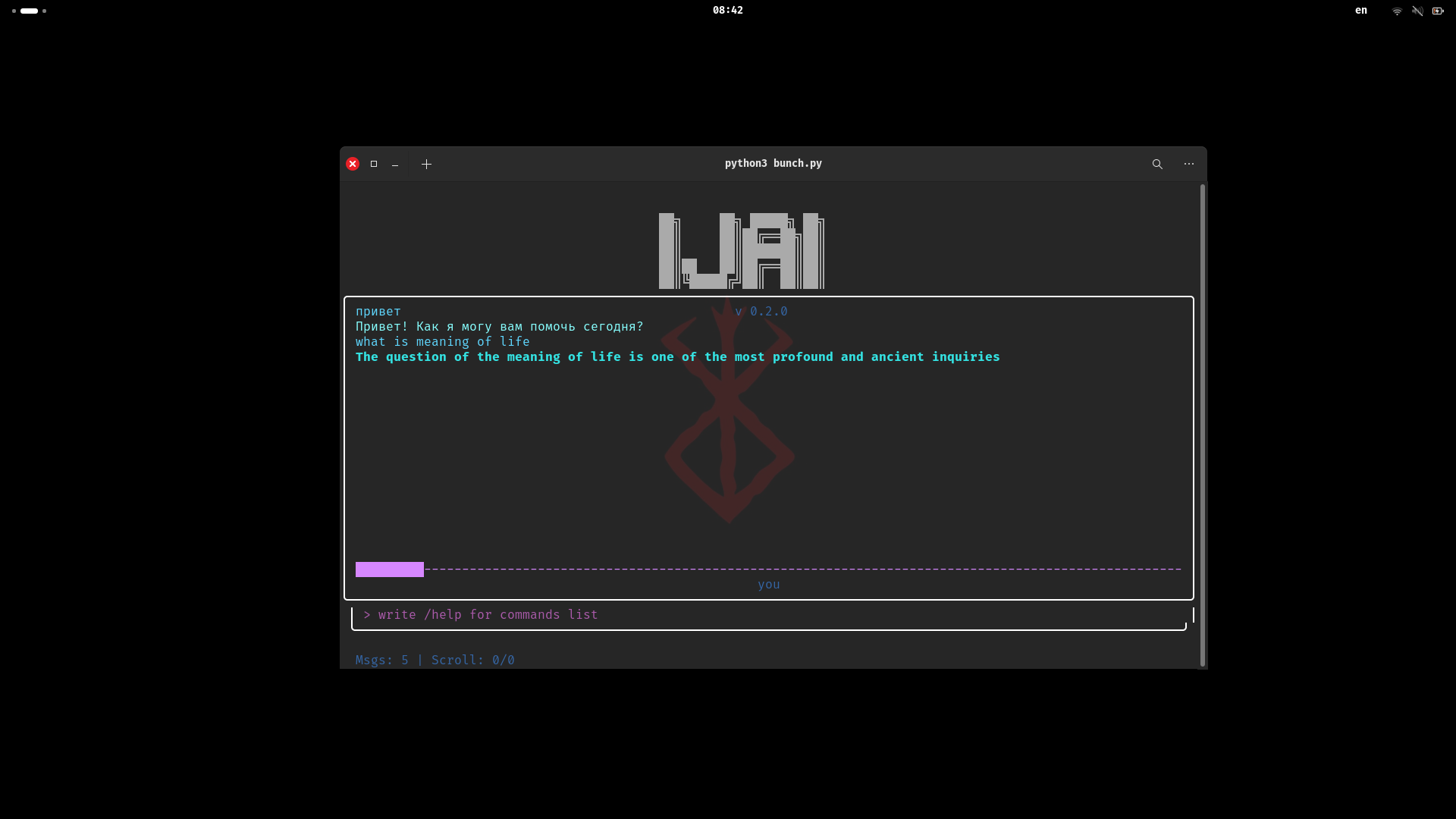

- Core Electron app (beta version)

- LLM integration (Phi-3 Mini, GPT-Neo, LLaMA-3, DeepSeek R1)

- STT integration (Whisper Small)

- TTS pipeline (TTS Small + Vocoder)

- Config-driven architecture (assistant, models, policies)

- Plugin framework (OpenAPI-based, example: Weather)

- Model installer scripts (installer.sh)
- Python integration for models (*.py bindings)
- Dataset ingestion (basic text/markdown corpora)

- Tokenizer and config handling (HF-compatible)

- Basic UI components (Sidebar, Header, DataTable, ChartCard)

- Build system (Forge + electron-builder)

- Model fine-tuning workflow (UI + CLI prototype)

- Interactive prompt playground for LLMs

- Voice cloning demo for TTS

- Speech-to-speech pipeline (STT → LLM → TTS)

- Minimal plugin marketplace (manual install)

- Automatic saving of configs to a USB flash drive

- GPU acceleration benchmarks (CUDA / ROCm)

- Model caching & optimized loading (disk + RAM)

- Dataset versioning & tagging

- CLI tool (ijai-cli) for headless workflows
- Enhanced error logging & monitoring dashboard

- Extended plugin APIs (beyond Weather)

- Cloud sync & model sharing

- Advanced policy engine (safety, filtering, sandboxing)

- Fine-tuning UI (drag-and-drop datasets)

- Multi-language UI (EN, RU, etc.)

- Integration with external APIs (translation, search, etc.)

- Plugin marketplace (in-app browsing & install)

- Mobile companion app (view results, run lightweight tasks)

- Open Source License: AGPLv3.0
- Commercial License: COMMERCIAL-LICENSE.md

All models, datasets, and configurations provided in this repository are released strictly for research and educational purposes.

By using this project, you agree to follow these rules:

- ❌ No malicious usage: It is strictly forbidden to use IJAI or any of its models for harmful purposes, including but not limited to:
  - spreading disinformation,
  - generating hateful or violent content,
  - surveillance or harassment,
  - assisting in illegal activities.
- ❌ No malicious fine-tuning: Fine-tuning IJAI models on datasets intended for harmful, discriminatory, or illegal applications is prohibited.
- ✅ Allowed usage: You may use, extend, and fine-tune the models for positive, ethical, and constructive purposes such as:
  - research,
  - accessibility,
  - education,
  - productivity,
  - creativity.

Important: Any violation of these policies voids your right to use or redistribute this project under its license.

We trust the open-source community to act responsibly and improve IJAI in ways that benefit everyone.

You can support me via crypto, Steam trade or Click. Any help is appreciated!

| Currency | Network | Address |

|---|---|---|

| USDT | TRC20 | TPutzJ12Bs4jAPLT9rkQhvg6PdwHhQfJVB |

You can send via Click using the app:

Thank you for your support. It helps me keep working on projects.

Prebuilt model weights and installers are available via the official distribution channel: Telegram.

The project is currently in the development stage, with core functionalities being implemented and tested.

Additional features, optimizations, and refinements are planned for subsequent development phases.